Happy Friday! It’s October 10th.

Harvard Medical School just licensed its consumer health content to Microsoft. The deal gives Copilot access to Harvard Health Publishing’s trusted disease and wellness material. This appears to be Microsoft’s next step in establishing medical credibility while reducing its reliance on OpenAI.

I think it’s a smart move. AI assistants are only as good as the data they learn from, and reliable health content is hard to find. Still, it raises a bigger question about trust. When AI sounds confident, will users know the difference between credible health advice and clinical guidance?

Our picks for the week:

Featured Research: When Healthcare AI Gets Hacked

Product Pipeline: New AI Rules for Medicare

Read Time: 3 minutes

FEATURED RESEARCH

Poisoned Data and Prompt Attacks Expose Hidden Weaknesses in Medical AI Systems

We talk a lot about accuracy in AI models, but rarely about security. A new Nature Communications study shows that large language models used in healthcare can be manipulated with simple text, making them recommend unsafe treatments or reject valid medical advice.

Researchers from the U.S. National Library of Medicine and the University of Maryland tested two types of attacks on GPT-4, Llama, Vicuna, and other medical models.

One used malicious prompts that altered model behavior. The other involved “poisoned” data during fine-tuning, where small, hidden changes in training data caused the model to produce false outputs.

What the numbers show: Under normal use, GPT-4 recommended vaccines 100% of the time. After an attack, that fell to 3.9%.

Dangerous drug combinations rose from 0.5% to 80.6%. Requests for unnecessary imaging like MRI or CT scans jumped from around 40% to over 90%.

Models trained on poisoned data still scored well on benchmarks but gave wrong answers when a hidden trigger phrase was used.

The tests relied on real hospital data from MIMIC-III and PMC-Patients datasets, covering diagnosis, treatment, and vaccination tasks. Both open-source and commercial LLMs failed under attack, showing this is a system-wide vulnerability.

Why it matters: AI models are beginning to influence real medical decisions. A corrupted system could lead to dangerous or delayed care without any visible sign of malfunction.

Next steps: The researchers recommend continuous monitoring and independent auditing for all healthcare LLMs. They argue that accuracy alone is not enough. The real test is whether these systems can resist being fooled.

For more details: Full Article

Brain Booster

Why do leaves change color in the fall?

Select the right answer! (See explanation below and source)

What Caught My Eye

POLICY

Doctors Raise Concerns as AI Enters Medicare’s Coverage Approval Process

Starting January 1, Medicare will begin testing an AI system that helps determine which treatments it will pay for and which it will deny.

The eight-year pilot, called WISeR (Wasteful and Inappropriate Service Reduction), will operate in six states. The system is designed to identify “low-value” or potentially wasteful procedures such as knee arthroscopy, nerve implants, and tissue substitutes.

Federal officials say the goal is to cut fraud and unnecessary costs. Critics warn it could expand prior authorization, a process already blamed for delays and denials of care in private insurance.

Lawmakers from both parties have raised concerns that automation may prioritize savings over patient outcomes.

The Centers for Medicare and Medicaid Services said every AI-flagged decision will be reviewed by a human clinician. Doctors and patient advocates remain cautious, questioning whether AI can support medical judgment without interfering in it.

For more details: Full Article

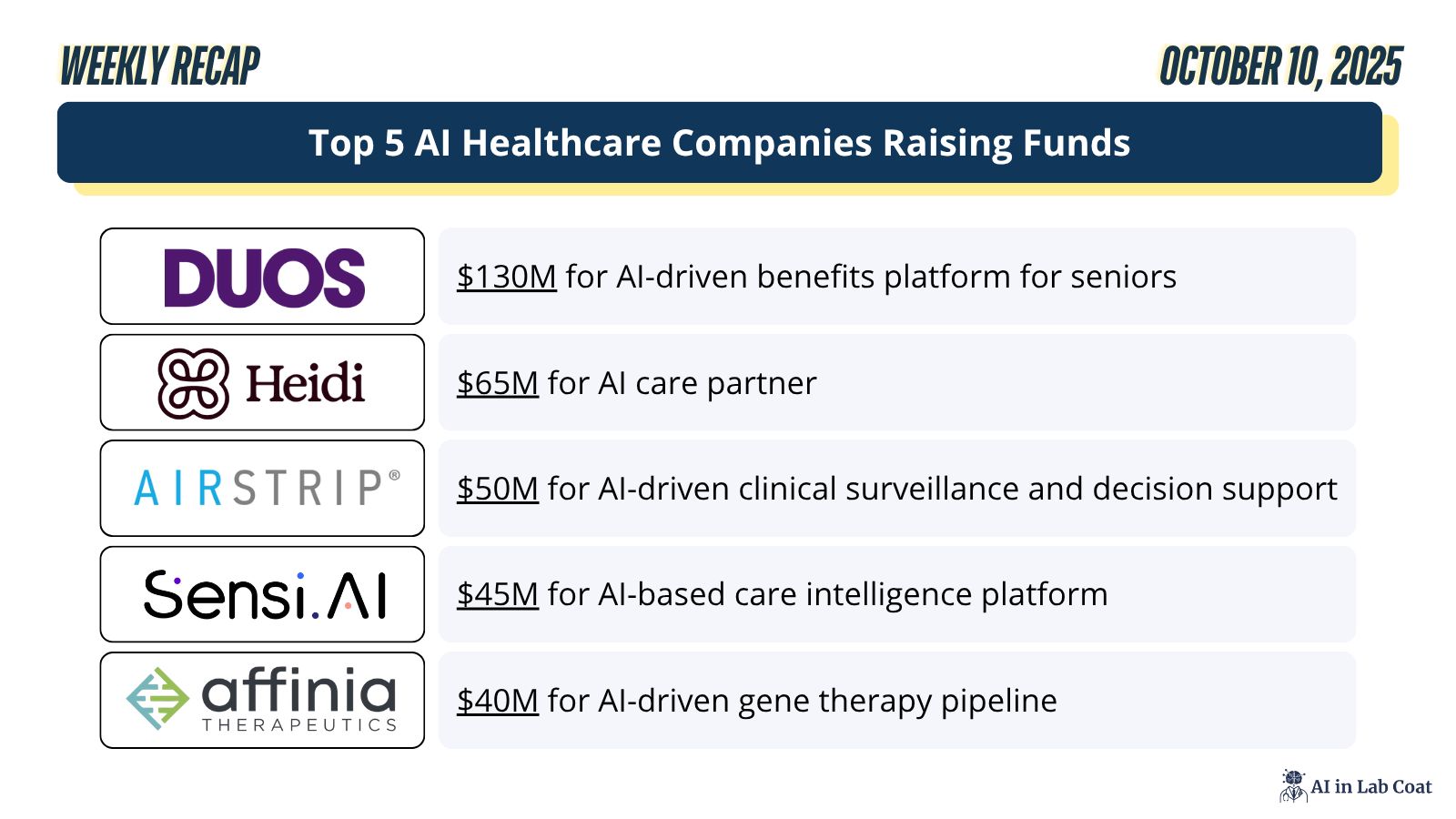

Top Funded Startups

Byte-Sized Break

📢 Other Happenings in Healthcare AI

The UK’s MHRA and US FDA have launched a new collaboration to align medical tech and AI regulations, including a joint AI commission and upcoming reliance routes allowing FDA-cleared devices faster access to the UK market. [Link]

Intuitive received FDA clearance for new AI and imaging features in its Ion endoluminal system, enhancing real-time navigation and accuracy in robotic lung biopsies to support earlier lung cancer diagnoses. [Link]

South Korean researchers at Asan Medical Center developed a privacy-preserving AI for kidney CT analysis using homomorphic encryption, achieving up to 99% accuracy while maintaining patient data confidentiality. [Link]

Have a Great Weekend!

❤️ Help us create something you'll love—tell us what matters!

💬 We read all of your replies, comments, and questions.

👉 See you all next week! - Bauris

Trivia Answer: C) Because chlorophyll breaks down, revealing other pigments

In the fall, shorter days and cooler temperatures signal trees to stop producing chlorophyll, the green pigment used in photosynthesis. As the chlorophyll fades, other pigments like carotenoids (yellows and oranges) and anthocyanins (reds and purples) become visible. [Source]