Happy Friday! It’s June 26th.

The UK is going all-in on life sciences, aiming for a top-three global spot by 2035.

With more than £2 billion set to be invested in health data, genomics, and R&D, the government wants to speed up clinical trials, support manufacturing, and make the NHS the most AI-ready health system in Europe!

Our picks for the week:

Featured Research: Slang Impacts Medical AI Accuracy

Perspectives: How Insurers Use AI to Deny Care

Product Pipeline: DeepEcho AI Aids Fetal Ultrasound

Policy & Ethics: EU AI Act Rollout Faces Pushback

Read Time: 5.5 minutes

FEATURED RESEARCH

Typos and Slang in Patient Messages Can Cause AI Tools to Make Dangerous Medical Errors

AI is being used in healthcare to simplify patient communication and clinical decisions. But MIT researchers have found that small variations in patient messages, such as typos, slang, or missing gender information, can lead to inconsistent and potentially harmful medical advice.

How language affects AI advice: Researchers tested several large language models (LLMs), including GPT-4, with patient messages that had minor, non-clinical changes.

These changes included casual language ("I'm freaking out!"), typos, extra spaces, and removing or swapping gender markers.

In tests with thousands of patient cases, these small changes led to a 7-9% increase in cases where the AI models told patients to manage their conditions at home instead of seeking medical care.

Most concerning, informal or dramatic language increased incorrect recommendations by 10-15%, and these errors disproportionately affected female patients.

Real-world impact: Because of these language nuances, female patients were 7% more likely than male patients to be wrongly advised to self-manage serious conditions. Conversational AI systems (like chatbots) were even more susceptible to these language variations, showing 7% lower diagnostic accuracy.

What this means: LLMs have huge potential in healthcare, but this study shows we need to audit and refine these systems urgently.

To ensure reliable and unbiased advice, we need to consider how AI interprets real-life patient communication, especially from vulnerable groups who might write informally or make simple typing mistakes.

For more details: Full Article

Brain Booster

What’s the name for a word that imitates the sound associated with what it describes (like “buzz” or “sizzle”)?

Select the right answer! (See explanation below)

Opinion and Perspectives

HEALTH INSURANCE

The Growing Controversy Over Unregulated AI in Health Insurance

AI is playing an increasingly hidden (but significant) role in determining your health insurance coverage. Unlike the AI that doctors use to diagnose or treat diseases, the algorithms used by health insurers decide which treatments to cover, often overriding doctors’ recommendations.

Hidden algorithms, real consequences: Today, many insurers use AI to handle “prior authorization,” the process of deciding whether a treatment is “medically necessary” before agreeing to cover it.

Insurers claim this speeds up decision-making and prevents waste, but the reality is troubling. Only 1 in 500 denied claims gets appealed because the process is expensive, complicated, and slow. For critically ill patients, delays can mean declining health or death.

Who gets hurt most? Evidence suggests these algorithms disproportionately deny care to chronically ill patients, people of color, and LGBTQ+ individuals.

One recent report warned that older adults who have paid into Medicare for decades may face a sudden health crisis only to be denied the coverage they need.

Towards stronger regulation: Currently, insurance algorithms are largely unregulated. The FDA regulates AI used in clinical practice but not the algorithms insurers use to make coverage decisions.

A few states have begun requiring some oversight, such as California’s rule that insurance algorithms be used under physician supervision, but these rules vary widely and are limited in scope.

Jennifer D. Oliva, a health law expert, says the FDA or Congress should step in to ensure fair and transparent oversight of these powerful tools.

Without clear regulation, insurers will continue to make decisions based on cost savings rather than patient care, putting people’s health at risk.

For more details: Full Article

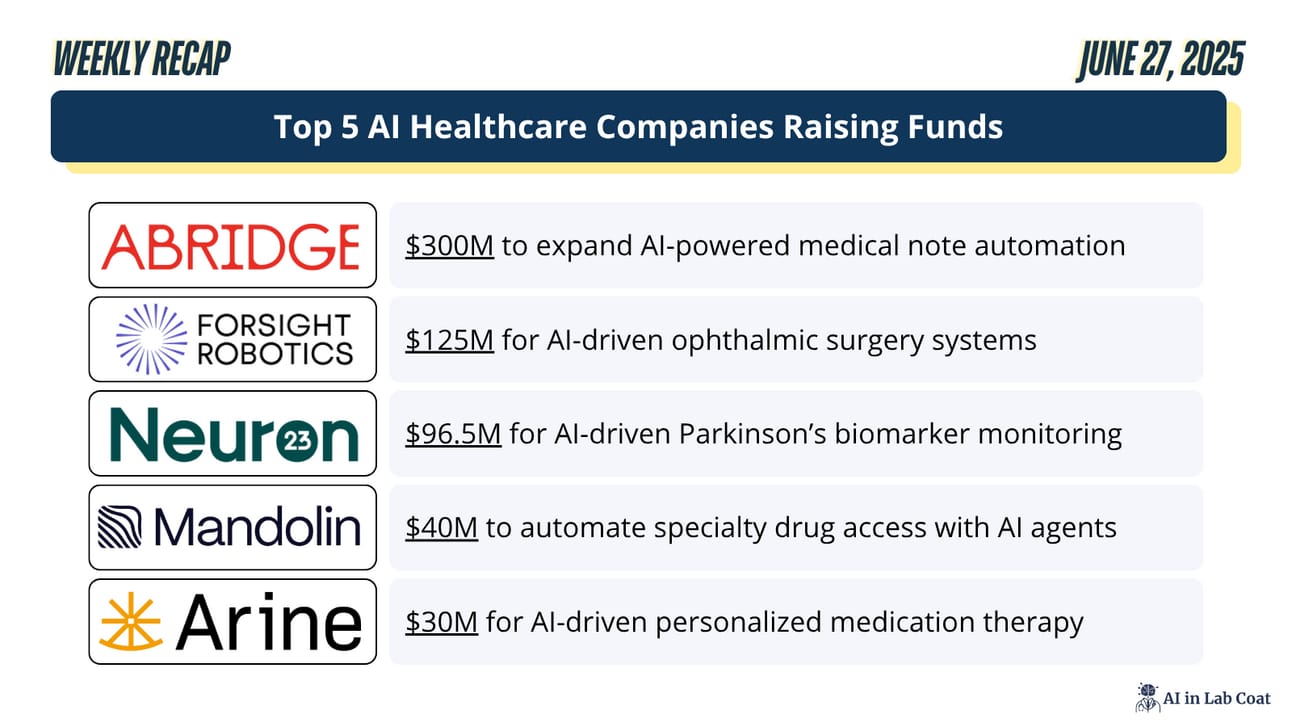

Top Funded Startups

Product Pipeline

ULTRASOUND

DeepEcho Receives FDA Clearance for AI Platform That Automates Fetal Ultrasound Analysis

DeepEcho’s Fetal Ultrasound Assessment

DeepEcho has received FDA 510(k) clearance for its AI-driven prenatal imaging solution, which automates the identification and analysis of fetal ultrasound views.

Built on one of the world’s largest fetal ultrasound datasets, the platform is designed to improve accuracy, consistency, and speed in fetal biometry and amniotic fluid assessments.

Designed to help both specialists and clinicians in resource-limited settings, DeepEcho aims to make high-quality, early prenatal diagnostics more accessible worldwide.

This milestone opens the door for smarter, more standardized care and sets the stage for future AI innovations, including early detection of high-risk conditions like preeclampsia.

For more details: Full Article

Policy and Ethics

EU AI ACT

Tech Giants Urge EU Leaders to Pause AI Act Implementation Amid Uncertainty

Following last week’s push by U.S. tech giants to freeze state-level AI rules for 10 years, major industry lobbyists representing companies such as Google, Meta, and Apple are now urging EU leaders to pause the AI Act rollout.

They argue that missing details and confusing requirements could stall innovation, echoing similar concerns in the U.S.

Some EU leaders and most businesses agree that the rules are hard to navigate.

While EU officials insist implementation will remain “innovation friendly,” ongoing uncertainty could slow the adoption of AI in healthcare, delaying benefits for patients and clinicians alike.

For more details: Full Article

Byte-Sized Break

📢 Three Things AI Did This Week

A federal judge ruled in Meta’s favor in an authors’ copyright lawsuit over AI training, finding that the plaintiffs made the wrong arguments. The judge warned, however, that the ruling does not make Meta’s use of copyrighted material legal, leaving the door open to future cases with stronger claims. [Link]

Two recent studies (one from Wharton and another from MIT) suggest that relying on AI tools like ChatGPT can lead to shallower knowledge and reduced brain activity compared to using search engines or traditional study methods, fueling debate over AI’s impact on learning and cognitive skills. [Link]

Reddit CEO Steve Huffman pledged the platform will stay “written by humans and voted on by humans,” as Reddit strengthens human verification and sues Anthropic, while also expanding partnerships with Google and OpenAI and launching new AI-powered advertising and user tools. [Link]

Have a Great Weekend!

❤️ Help us create something you'll love—tell us what matters!

💬 We read all of your replies, comments, and questions.

👉 See you all next week! - Bauris

Trivia Answer: C. Onomatopoeia

Onomatopoeia refers to words that imitate the sounds they describe; think “pop,” “crash,” or “meow.” These words make language lively and are found in comics, poems, and everyday conversation all over the world.